Modernizing Databases: Transitioning from EC2 to Managed Database Solutions

By Devangi Goswami, Piyush Jalan / Oct 18, 2024

In today's cloud-centric world, modernizing your database infrastructure is key to achieving scalability, security, and operational efficiency. As organizations continue to evolve, moving from traditional database setups to managed services like Amazon RDS represents a significant step forward.

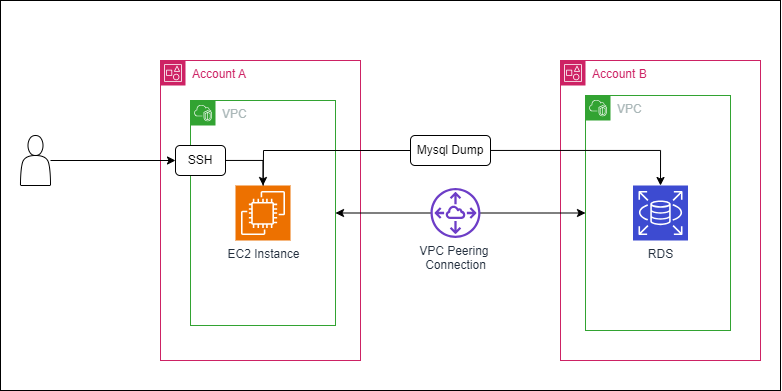

This guide covers a practical scenario where a MySQL database dump, including. mysql and .txt files, is restored from an EFS mounted on an EC2 instance to an RDS instance in a different AWS account. By transitioning from EC2-hosted databases to RDS, you are not only streamlining the restoration process but also embracing a modernized database approach that includes robust cutover strategies to minimize downtime and disruption.

The modernization process offers advantages in terms of scalability, performance, security, and manageability, making it a strategic shift toward future proofing your database infrastructure.

Why Modernize?

Traditional setups involving MySQL databases hosted on EC2 instances come with challenges. While they offer flexibility, they also require extensive management, including manual backups, patching, and scaling. The use of EFS as a storage solution adds another layer of complexity, particularly when dealing with large databases or cross-account transfers.

Management Overhead: EC2-hosted databases require manual backups, patching, and scaling, adding complexity, especially when using EFS for storage.

Improved Performance: RDS automates routine tasks, offering better performance and easier management.

Scaling: RDS provides automatic storage scaling and supports read replicas for distributing workloads, eliminating manual intervention.

High Availability: Multi-AZ deployments in RDS ensure high availability with automatic failover, reducing downtime risks.

Security: RDS offers encryption at rest and in transit, ensuring data protection. It integrates with AWS IAM for secure, role-based access control.

Cutover Strategies: Seamless migration with minimal disruption through phased cutovers and final sync.

Prerequisites

To successfully modernize your database and transition from an EC2-based setup to RDS, ensure the following prerequisites are met:

- MySQL Dump Files: The .txt and .mysql dump files should be located on your EFS, mounted on your EC2 instance.

- IAM Roles and Security Groups:

- EC2 Instance IAM Role: Must have permissions to access RDS instances in the target account.

- RDS Security Group: The security group attached to the RDS instance should allow inbound MySQL traffic (typically on port 3306) from the EC2 instance's IP.

- Cross-Account Role (Optional): If your setup uses cross-account roles, ensure the EC2 instance has permissions to assume a role in the target account.

- MySQL Credentials: MySQL user credentials with the necessary privileges to restore databases in the RDS instance.

- Network Connectivity: Ensure that your EC2 instance has network access to the RDS instance in the target account.

| Configuration Component | Description | Actions Required |

|---|---|---|

| Security Groups | ||

| EC2 Security Group | Ensure it allows outbound traffic on port 3306 (default MySQL port). | - Go to EC2 management console. - Edit the security group to allow outbound traffic on port 3306. |

| RDS Security Group | Update to allow inbound traffic from the EC2 instance's IP address or security group on port 3306. | - Edit the inbound rules of the RDS security group. |

| - Allow traffic from the EC2 instance's IP or security group on port 3306. | ||

| VPC Peering | Required if EC2 and RDS are in different VPCs, and you want to avoid using public IPs. | |

| Create VPC Peering Connection | Establish a peering connection between the VPCs. | - Go to the VPC console in the AWS account of the EC2 instance. - Create a peering connection to the VPC in the RDS account. - Accept the peering request in the RDS account. |

| Update Route Tables | Modify route tables in both VPCs to include routes to the peered VPC. | - Edit route tables in both VPCs. - Add routes to the peered VPC through the peering connection. |

| Update Security Groups | Ensure security groups in both VPCs allow necessary traffic between them. | - Update security group rules in both accounts to permit traffic between instances in the peered VPCs. |

The Modernization Process: Moving from EC2 with EFS to RDS

Step 1: Prepare the EC2 Instance and Verify the MySQL Dump Files

SSH into your EC2 instance

ssh -i your-key.pem ec2-user@your-ec2-ip-addressNavigate to the directory where your EFS is mounted:

cd /mnt/efs/path-to-dumpVerify the presence of your .txt and .mysql files:

ls -lhStep 2: Configure Connectivity Between EC2 and RDS

To ensure smooth communication between your EC2 instance and the RDS instance in another account:

- Security Group Configuration: Update the security group of the RDS instance to allow inbound connections from the IP address of your EC2 instance or its security group.

- Network Access: Verify that the EC2 instance can reach the RDS instance by pinging its endpoint:

ping your-rds-endpoint.rds.amazonaws.comStep 3: Automate the Transfer and Restoration

Modernization is not just about migrating data; it’s also about optimizing processes. By using an automated script to handle the restoration of large MySQL dump files, you reduce the risk of errors and increase efficiency. This script will manage the transfer and preprocessing of .txt files, ensuring a complete and accurate restoration in RDS.

#!/bin/bash

# VariablesDB_USER="UserName"DB_PASSWORD="UserPassword"DB_NAME="DBName"DB_HOST="endpoint"SQL_DIR="./"TMP_DIR="./tmp"TXT_FIELDS_TERMINATED_BY="^" # The delimiter in your .txt fileTXT_FIELDS_ENCLOSED_BY="" # No enclosing characterTXT_LINES_TERMINATED_BY="\n" # Lines terminated by newline

# Function to import a SQL file into the databaseimport_sql_file() { local file=$1 echo "Importing $file into $DB_NAME database" mysql -h"$DB_HOST" -u"$DB_USER" -p"$DB_PASSWORD" "$DB_NAME" < "$file" if [ $? -eq 0 ]; then echo "Successfully imported $file" else echo "Error importing $file" fi}

# Function to preprocess a TXT filepreprocess_txt_file() { local file=$1 local tmp_file="$TMP_DIR/$(basename "$file")" mkdir -p "$TMP_DIR"

# Preprocess the file: remove extra spaces, special characters, and blank lines sed 's/[[:space:]]\+/ /g' "$file" | tr -d '\000-\011\013-\037\177' | sed '/^$/d' > "$tmp_file"

echo "$tmp_file"}

# Function to import a TXT file into the databaseimport_txt_file() { local file=$1 local preprocessed_file=$(preprocess_txt_file "$file") local table_name=$(basename "$file" .txt) # Assuming table name is the same as file name without extension

echo "Loading data from $preprocessed_file into $table_name table in $DB_NAME database" mysql -h"$DB_HOST" -u"$DB_USER" -p"$DB_PASSWORD" "$DB_NAME" -e " LOAD DATA LOCAL INFILE '$preprocessed_file' INTO TABLE $table_name FIELDS TERMINATED BY '$TXT_FIELDS_TERMINATED_BY' ENCLOSED BY '$TXT_FIELDS_ENCLOSED_BY' LINES TERMINATED BY '$TXT_LINES_TERMINATED_BY';" if [ $? -eq 0 ]; then echo "Successfully imported $file" else echo "Error importing $file" fi}

# Loop through each SQL and TXT file in the directoryfor file in "$SQL_DIR"/*.sql "$SQL_DIR"/*.txtdo if [ -f "$file" ]; then if [[ $file == *.sql ]]; then import_sql_file "$file" elif [[ $file == *.txt ]]; then import_txt_file "$file" fi fidone

# Check if no files were foundif ! ls "$SQL_DIR"/*.sql "$SQL_DIR"/*.txt 1> /dev/null 2>&1; then echo "No SQL or TXT files found in $SQL_DIR"fi

# Clean up the temporary directoryrm -rf "$TMP_DIR"

- Set Variables: Define the MySQL credentials, database name, RDS host, and other parameters.

- Import SQL Files: The script uses the mysql command to import .sql files into the specified database.

- Preprocess and Import TXT Files: The script preprocesses .txt files by removing unwanted spaces and characters before importing them into corresponding tables.

Automating this task aligns with the principles of database modernization, where efficiency and reducing manual interventions are critical. In a traditional EC2 setup, such tasks would require manual handling, but in a modernized environment, automation plays a key role in enhancing productivity.

Save this script as restore_mysql.sh and make it executable:

chmod +x restore_mysql.shStep 4: Cutover Strategies for Minimal Downtime

One of the key aspects of database modernization is the implementation of effective cutover strategies. A well-planned cutover strategy ensures that the transition from the old system (EC2-hosted database) to the new one (RDS) is smooth, with minimal downtime and disruption.

Key Cutover Strategies:

- Phased Migration: Gradually migrate portions of the database or specific applications to RDS while keeping the original EC2-based database operational. This approach allows for thorough testing and validation before full migration.

- Blue-Green Deployment: Set up the RDS instance as the "green" environment while keeping the EC2-hosted database as the "blue" environment. After testing and validation, switch all traffic to the RDS instance, ensuring a smooth transition with minimal downtime.

- Final Cutover: Schedule the final cutover during off-peak hours, when the impact on users is minimal. Perform a final synchronization of the data, update DNS entries or application configurations to point to the new RDS instance, and decommission the old EC2-based database

Step 5: Execute the Restoration Script

Running the automated script not only transfers the database but also embodies the modernization effort by minimizing manual intervention. This approach aligns with the principles of database modernization, where automation and efficiency are prioritized.

./restore_mysql.shThis script will sequentially restore. mysql files and load data from .txt files into the specified database on the RDS instance.

Step 6: Verify the Restoration

After the script completes, log in to the RDS instance to verify the data:

mysql -h your-rds-endpoint.rds.amazonaws.com -u your-username -pCheck the database and table contents to ensure everything has been restored correctly.

USE your-database.SHOW TABLES;SELECT COUNT(*) FROM your_table;Step 7: Clean Up and Monitor

Cost Optimization: RDS offers various pricing models such as on-demand, reserved, and spot instances. This flexibility allows you to optimize costs based on your usage patterns, unlike the manual cost management in EC2 setups.

Monitoring the restoration process and cleaning up unnecessary files is part of maintaining a modern, lean infrastructure. Using AWS CloudWatch for monitoring ensures that any issues can be detected early, and AWS Performance Insights allows for continuous optimization of the database performance.

Conclusion

Migrating MySQL databases from an EC2 instance with EFS to Amazon RDS is more than just a data transfer—it's a significant step toward database modernization. This process reduces operational overhead, improves performance, enhances security, and ensures minimal downtime through well-planned cutover strategies. By embracing Amazon RDS, you ensure that your database environment is scalable, secure, and optimized for the future, all while aligning with modern best practices.