The Traditional Manufacturing Floor: A Metaphor for DataOps

By Troy Watt / Jun 09, 2023

Introduction

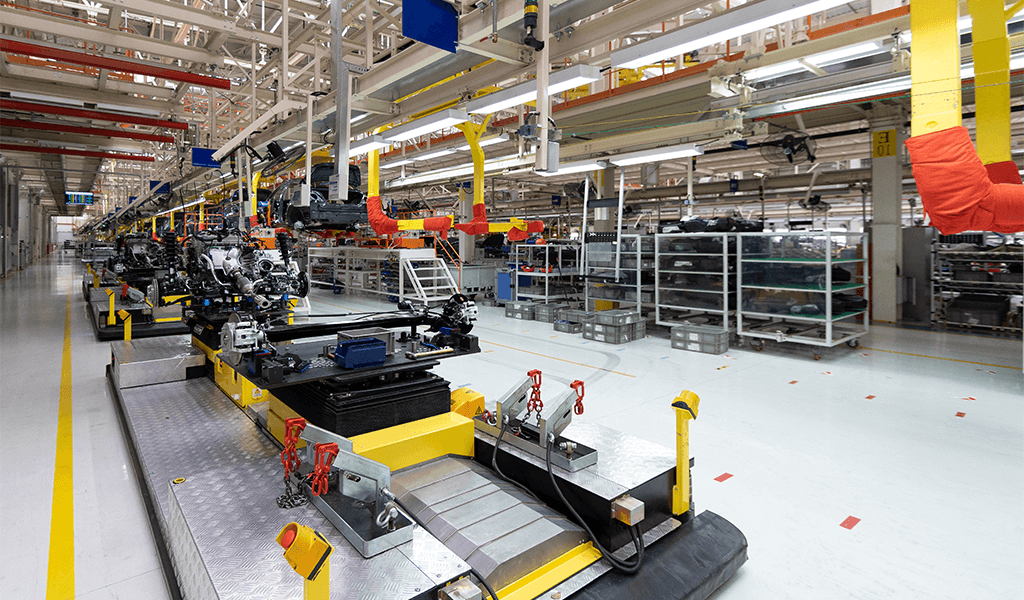

In the world of data management and operations, DataOps has emerged as a powerful methodology that brings together data engineering, data integration, and data quality practices. DataOps aims to streamline and optimize data workflows, enabling organizations to extract maximum value from their data assets. To better understand the principles and practices of DataOps, let's delve into the metaphor of a traditional manufacturing floor. By drawing parallels between the two, we can gain valuable insights into the key components, challenges, and benefits of implementing DataOps in modern data-driven organizations.

Assembly Line: Efficient Data Pipelines

On a traditional manufacturing floor, an assembly line represents a sequence of steps through which raw materials are transformed into finished products. Similarly, in DataOps, data pipelines serve as the assembly line for data transformation. These pipelines ensure that data flows smoothly, undergoes necessary transformations, and reaches its intended destination in a timely manner. Just as optimizing the flow of materials enhances productivity on a manufacturing floor, well-designed data pipelines enable efficient data processing and facilitate seamless integration with downstream systems.
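To make the assembly-line picture concrete, here is a minimal sketch of a pipeline built from small stages that each hand their output to the next. The record fields (`order_id`, `amount`) and the stage names (`clean`, `enrich`) are hypothetical and chosen only for illustration; a real pipeline would read from and write to actual systems.

```python
def extract():
    # Hypothetical raw input standing in for a source system.
    return [
        {"order_id": 1, "amount": " 19.99 "},
        {"order_id": 2, "amount": "5.00"},
    ]


def clean(records):
    # Station 1: strip whitespace and convert the amount to a number.
    for record in records:
        yield {**record, "amount": float(record["amount"].strip())}


def enrich(records):
    # Station 2: add a derived field (the 10.0 threshold is an assumption).
    for record in records:
        yield {**record, "is_large": record["amount"] >= 10.0}


def run_pipeline(source, stages):
    # Pass the data through each stage in order, like items on a conveyor.
    data = source
    for stage in stages:
        data = stage(data)
    return list(data)


result = run_pipeline(extract(), [clean, enrich])
```

Because each stage only depends on the shape of the records it receives, stages can be reordered, replaced, or tested on their own, much like individual stations on a line.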

Quality Control: Data Validation and Monitoring

Quality control plays a vital role in manufacturing to ensure that products meet predefined standards. Similarly, in DataOps, data validation and monitoring ensure the quality and integrity of data throughout its lifecycle. Robust data validation processes identify and rectify inconsistencies, inaccuracies, or anomalies in the data, improving its reliability and usability. By continuously monitoring data pipelines, organizations can proactively detect and resolve issues, ensuring that data remains accurate and reliable for downstream analysis and decision-making.
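As a rough illustration of an inspection station for data, the sketch below checks each record against a few rules and separates passing records from rejected ones. The rules and field names are assumptions made for the example, not a prescribed standard.

```python
def validate(record):
    # Return a list of problems found in one record (empty means it passed).
    errors = []
    if record.get("order_id") is None:
        errors.append("missing order_id")
    amount = record.get("amount")
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        errors.append("amount is not numeric")
    elif amount < 0:
        errors.append("amount is negative")
    return errors


def split_valid(records):
    # Route each record to the accepted batch or to the reject pile,
    # keeping the reasons so the issue can be investigated later.
    valid, rejected = [], []
    for record in records:
        errors = validate(record)
        if errors:
            rejected.append((record, errors))
        else:
            valid.append(record)
    return valid, rejected


batch = [
    {"order_id": 1, "amount": 19.99},
    {"order_id": None, "amount": 5.0},
    {"order_id": 3, "amount": -2.0},
    {"order_id": 4, "amount": "n/a"},
]
valid, rejected = split_valid(batch)
```

Keeping the rejected records alongside their error messages, rather than silently dropping them, is what makes monitoring possible: a rising rejection count is an early signal that something upstream has changed.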

Standardization: Consistent Data Governance

Standardization is a critical aspect of manufacturing, enabling consistency in product specifications, components, and processes. In DataOps, standardized data governance practices establish guidelines for data storage, organization, naming conventions, and security protocols. This ensures that data is uniformly structured and accessible across the organization, enabling efficient data integration, collaboration, and analysis. Standardization helps eliminate data silos and facilitates a cohesive data ecosystem, just as it does on a manufacturing floor.
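One small piece of such governance, a naming convention, can be checked automatically. The sketch below assumes a hypothetical rule that column names must be lowercase snake_case; the rule itself and the sample column names are illustrative only.

```python
import re

# Assumed convention: lowercase letters and digits, words joined by
# single underscores, starting with a letter.
SNAKE_CASE = re.compile(r"[a-z][a-z0-9]*(_[a-z0-9]+)*")


def nonconforming_columns(columns):
    # Return the column names that break the naming convention.
    return [name for name in columns if not SNAKE_CASE.fullmatch(name)]


columns = ["customer_id", "OrderDate", "total amount", "region"]
violations = nonconforming_columns(columns)
```

A check like this could run whenever a new dataset is registered, so deviations are caught before they spread across teams.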

Continuous Improvement: Iterative Optimization

Manufacturing processes often undergo continuous improvement initiatives to enhance efficiency, reduce waste, and increase output. Similarly, DataOps embraces the principles of continuous improvement through iterative optimization. By leveraging feedback loops and metrics, DataOps teams identify bottlenecks, optimize workflows, and fine-tune data operations for improved performance. Continuous improvement enables organizations to adapt to changing data requirements, incorporate new technologies, and achieve higher levels of data-driven insights and outcomes.
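A feedback loop starts with measurement. The following sketch times each stage of a pipeline and reports the slowest one as a candidate bottleneck. The stage names are hypothetical, and the stages are assumed to return fully built lists; lazy generators would defer their work and make the timings misleading.

```python
import time


def timed(stage, data):
    # Run one stage and measure how long it took, in seconds.
    start = time.perf_counter()
    result = stage(data)
    return result, time.perf_counter() - start


def profile_pipeline(data, stages):
    # Run (name, stage) pairs in order and record each stage's duration.
    durations = {}
    for name, stage in stages:
        data, durations[name] = timed(stage, data)
    return data, durations


def slowest_stage(durations):
    # The stage with the largest duration is the first place to look.
    return max(durations, key=durations.get)


output, durations = profile_pipeline(
    [1, 2, 3],
    [
        ("double", lambda rows: [r * 2 for r in rows]),
        ("increment", lambda rows: [r + 1 for r in rows]),
    ],
)
bottleneck = slowest_stage(durations)
```

Tracking these durations across runs, rather than from a single run, gives a more reliable picture of where optimization effort will pay off.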

Collaboration: Cross-functional Teams

On a manufacturing floor, different teams work together to ensure smooth operations and achieve production goals. Similarly, DataOps encourages collaboration between data engineers, data scientists, business analysts, and other stakeholders. Cross-functional teams leverage their expertise to design, develop, and maintain robust data pipelines, ensuring alignment with business objectives. Collaboration fosters a culture of data-driven decision-making, where stakeholders can access, analyze, and derive insights from data, driving innovation and competitiveness.

Scalability: Handling Increasing Data Volumes

In manufacturing, scalability refers to the ability to ramp up production to meet increasing demand. Likewise, in the data realm, scalability is a crucial aspect of DataOps. As data volumes continue to grow exponentially, DataOps provides the framework and tools to scale data pipelines and processing capabilities accordingly. Whether it involves adopting cloud-based infrastructure, implementing distributed computing frameworks, or leveraging containerization technologies, DataOps enables organizations to handle large-scale data operations with ease.

Conclusion

The metaphor of a traditional manufacturing floor offers valuable insights into the principles and practices of DataOps. As an assembly line ensures efficient production, data pipelines streamline data transformation. By understanding the parallels between assembly lines, quality control, standardization, continuous improvement, collaboration, and scalability, we can navigate the challenges and harness the transformative power of DataOps. This understanding enables our teams to design and optimize data pipelines, enforce data governance, and drive continuous improvement, all with the goal of unlocking the full potential of data-driven success for organizations.