Multi-node Openstack (Newton) with Ceph as Storage

By Bikram Singh / Jan 21, 2018

In this article we will configure the OpenStack Newton release to use Ceph Kraken 11.2.1 as a unified storage backend. We will not cover the basics of what OpenStack is and how it works, and the same goes for Ceph. This blog focuses purely on setting up a private cloud based on OpenStack, with Ceph as the unified storage backend for Cinder, Glance and Nova ephemeral disks, and walks step by step through configuring the complete cloud.

Prerequisites

To complete this guide, you will need the infrastructure below. I have used bare-metal servers to set up the cloud; however, for a lab environment, virtual machines can also be used.

Cloud Infrastructure

- CentOS 7.3 x86_64 as the base operating system for both OpenStack and Ceph nodes.

- Openstack Newton release

- Ceph Kraken 11.2.1 release

- 1x Openstack Controller – Baremetal

- 4x Openstack Compute Nodes – Baremetal

- 1x Ceph Monitor – Baremetal

- 3x Ceph OSD nodes – Baremetal

- 1x Ceph Admin node – Virtual Machine

Hardware Specs of all the nodes used in this article.

I have detailed the hardware specs of the nodes used in this guide; however, it is not mandatory to have nodes with these specs. You can use any combination of compute, network and storage specs depending on your requirements.

High level Cloud Architecture

The image below shows all the nodes and the services running on them.

Network & VLAN Schema

The table below shows the VLANs used, the purpose of each VLAN and the corresponding subnets.

Physical Connectivity

In the physical topology diagram below you can see that we are using a centralized network node for all East-West and North-South traffic routing, which also performs SNAT. Only the network node (os-controller0) has access to the public/internet network (VLAN 81). VXLAN is used as the tenant network type.

Configuration of Openstack

As part of this article we will install the OpenStack components below. If you want to configure or add more OpenStack components, you can modify the Packstack answer file according to your requirements.

- OpenStack Compute (Nova)

- OpenStack Networking (Neutron)

- OpenStack Image (Glance)

- OpenStack Block Storage (Cinder)

- OpenStack Identity (Keystone). I have used Keystone v3

- OpenStack Dashboard (Horizon)

- OpenStack Object Storage (Swift)

Configure Openstack nodes

To deploy OpenStack we used the Packstack utility with a customized answer file, and ran Packstack with that answer file on the os-controller0 node.

Before running Packstack, change the settings below on all the nodes in the cloud, including the Ceph nodes.

setenforce 0 ; sed -i --follow-symlinks 's/=enforcing/=disabled/g' /etc/sysconfig/selinux

systemctl disable firewalld

systemctl stop firewalld

systemctl stop NetworkManager

systemctl disable NetworkManager

yum update -y ; reboot

Create SSH keys and copy them to all the compute nodes so that, during the Packstack installation, the controller can log in to all the compute nodes without a password.

Run the commands below on the OpenStack controller node os-controller0.

ssh-keygen

ssh-copy-id [email protected]

ssh-copy-id [email protected]

ssh-copy-id [email protected]

ssh-copy-id [email protected]
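The four ssh-copy-id calls above can be collapsed into a loop. This is a minimal sketch, assuming the root user and the compute node IPs that appear in the Packstack log further down (10.9.80.51, 10.9.80.52, 10.9.80.60, 10.9.80.61); the function name is just illustrative. Setting `DRY_RUN=1` only prints the commands, so you can check the list before touching any host.

```shell
#!/usr/bin/env bash
# Copy the controller's SSH public key to every compute node.
# Assumption: root user; compute node IPs taken from the Packstack log.
COMPUTE_NODES=(10.9.80.51 10.9.80.52 10.9.80.60 10.9.80.61)

push_ssh_keys() {
  local host
  for host in "$@"; do
    if [[ "${DRY_RUN:-0}" == "1" ]]; then
      # Dry run: only show what would be executed.
      echo "ssh-copy-id root@${host}"
    else
      ssh-copy-id "root@${host}"
    fi
  done
}

# Usage on os-controller0, after ssh-keygen:
#   DRY_RUN=1 push_ssh_keys "${COMPUTE_NODES[@]}"   # review
#   push_ssh_keys "${COMPUTE_NODES[@]}"             # run for real
```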

yum install centos-release-openstack-newton -y

yum install openstack-packstack -y

yum install -y openstack-utils

packstack --gen-answer-file=/newton-answer.txt

Modify the answer file to update the required configuration and enable the features you need.
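Because a Packstack answer file is a flat list of KEY=value lines, the edits can be scripted instead of made in an editor. A minimal sketch; the helper name is my own, and the key names in the usage comment (CONFIG_CONTROLLER_HOST, CONFIG_COMPUTE_HOSTS, CONFIG_KEYSTONE_API_VERSION, CONFIG_NEUTRON_ML2_TENANT_NETWORK_TYPES) are ones I expect in a Newton answer file, so confirm them against your generated file. The IPs are the ones from the Packstack log below.

```shell
#!/usr/bin/env bash
# Set KEY=VALUE in a Packstack answer file.
# Replaces an existing "KEY=" line, or appends the line if the key is missing.
# Assumption: VALUE contains no '|', '&' or '\' (they are special to sed here).
set_answer() {
  local file="$1" key="$2" value="$3"
  if grep -q "^${key}=" "$file"; then
    # '|' as delimiter because values may contain '/' or ','.
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# Usage (key names to be confirmed against the generated answer file):
#   ANSWER=/path/to/answer-file
#   set_answer "$ANSWER" CONFIG_CONTROLLER_HOST 10.9.80.50
#   set_answer "$ANSWER" CONFIG_COMPUTE_HOSTS 10.9.80.51,10.9.80.52,10.9.80.60,10.9.80.61
#   set_answer "$ANSWER" CONFIG_KEYSTONE_API_VERSION v3
#   set_answer "$ANSWER" CONFIG_NEUTRON_ML2_TENANT_NETWORK_TYPES vxlan
```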

Before running Packstack, run the command below on all the compute nodes to install the OpenStack Newton repo.

yum install centos-release-openstack-newton -y

[root@os-controller0 ~]# packstack --answer-file=/newton-answer.txt

Welcome to the Packstack setup utility

The installation log file is available at: /var/tmp/packstack/20170825-203853-vKp16P/openstack-setup.log

Installing:

Clean Up [ DONE ]

Discovering ip protocol version [ DONE ]

Setting up ssh keys [ DONE ]

Preparing servers [ DONE ]

Pre installing Puppet and discovering hosts' details [ DONE ]

Preparing pre-install entries [ DONE ]

Setting up CACERT [ DONE ]

Preparing AMQP entries [ DONE ]

Preparing MariaDB entries [ DONE ]

Fixing Keystone LDAP config parameters to be undef if empty[ DONE ]

Preparing Keystone entries [ DONE ]

Preparing Glance entries [ DONE ]

Checking if the Cinder server has a cinder-volumes vg[ DONE ]

Preparing Cinder entries [ DONE ]

Preparing Nova API entries [ DONE ]

Creating ssh keys for Nova migration [ DONE ]

Gathering ssh host keys for Nova migration [ DONE ]

Preparing Nova Compute entries [ DONE ]

Preparing Nova Scheduler entries [ DONE ]

Preparing Nova VNC Proxy entries [ DONE ]

Preparing OpenStack Network-related Nova entries [ DONE ]

Preparing Nova Common entries [ DONE ]

Preparing Neutron LBaaS Agent entries [ DONE ]

Preparing Neutron API entries [ DONE ]

Preparing Neutron L3 entries [ DONE ]

Preparing Neutron L2 Agent entries [ DONE ]

Preparing Neutron DHCP Agent entries [ DONE ]

Preparing Neutron Metering Agent entries [ DONE ]

Checking if NetworkManager is enabled and running [ DONE ]

Preparing OpenStack Client entries [ DONE ]

Preparing Horizon entries [ DONE ]

Preparing Swift builder entries [ DONE ]

Preparing Swift proxy entries [ DONE ]

Preparing Swift storage entries [ DONE ]

Preparing Heat entries [ DONE ]

Preparing Heat CloudFormation API entries [ DONE ]

Preparing Gnocchi entries [ DONE ]

Preparing MongoDB entries [ DONE ]

Preparing Redis entries [ DONE ]

Preparing Ceilometer entries [ DONE ]

Preparing Aodh entries [ DONE ]

Preparing Trove entries [ DONE ]

Preparing Sahara entries [ DONE ]

Preparing Nagios server entries [ DONE ]

Preparing Nagios host entries [ DONE ]

Preparing Puppet manifests [ DONE ]

Copying Puppet modules and manifests [ DONE ]

Applying 10.9.80.50_controller.pp

10.9.80.50_controller.pp: [ DONE ]

Applying 10.9.80.50_network.pp

10.9.80.50_network.pp: [ DONE ]

Applying 10.9.80.60_compute.pp

Applying 10.9.80.61_compute.pp

Applying 10.9.80.51_compute.pp

Applying 10.9.80.52_compute.pp

10.9.80.60_compute.pp: [ DONE ]

10.9.80.61_compute.pp: [ DONE ]

10.9.80.51_compute.pp: [ DONE ]

10.9.80.52_compute.pp: [ DONE ]

Applying Puppet manifests [ DONE ]

Finalizing [ DONE ]

**** Installation completed successfully ******

Additional information:

* Time synchronization installation was skipped. Please note that unsynchronized time on server instances might be problem for some OpenStack components.

* File /root/keystonerc_admin has been created on OpenStack client host 10.9.80.50. To use the command line tools you need to source the file.

* To access the OpenStack Dashboard browse to https://10.9.80.50/dashboard .

Please, find your login credentials stored in the keystonerc_admin in your home directory.

* To use Nagios, browse to http://10.9.80.50/nagios username: nagiosadmin, password: c5cf26b9c2854cbc

* The installation log file is available at: /var/tmp/packstack/20170825-203853-vKp16P/openstack-setup.log

* The generated manifests are available at: /var/tmp/packstack/20170825-203853-vKp16P/manifests

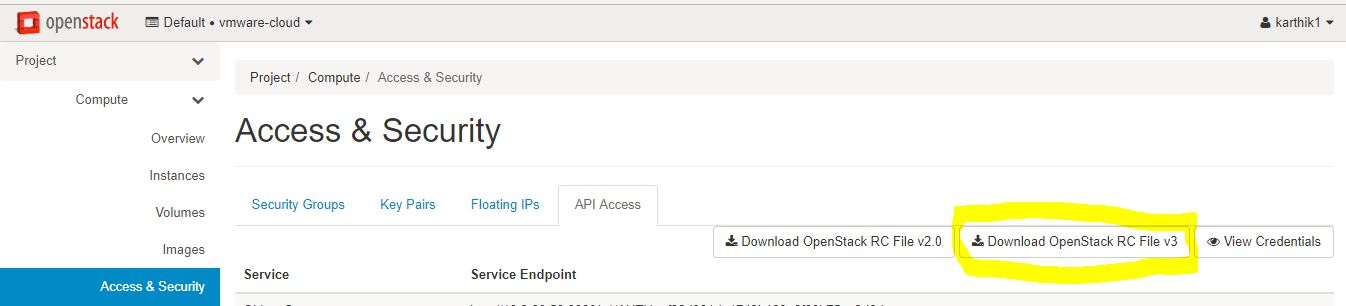

[root@os-controller0 ~]#

You can find the admin username and password in the automatically generated file "keystonerc_admin".

[root@os-controller0 ~]# cat keystonerc_admin

unset OS_SERVICE_TOKEN

export OS_USERNAME=admin

export OS_PASSWORD=123456

export OS_AUTH_URL=http://10.9.80.50:5000/v3

export PS1='[\u@\h \W(keystone_admin)]\$ '

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_IDENTITY_API_VERSION=3

[root@os-controller0 ~]#

By default, multi-domain support is disabled in the Horizon UI. Change the settings in /etc/openstack-dashboard/local_settings as shown below. Keystone v3 uses a new policy file, which can be downloaded from https://github.com/openstack/keystone/blob/master/etc/policy.v3cloudsample.json. Create a Keystone policy file named policy.v3cloudsample.json in /etc/openstack-dashboard/ and change its ownership:

chown root:apache /etc/openstack-dashboard/policy.v3cloudsample.json

Change the settings below to enable multi-domain support in the Horizon UI

vi /etc/openstack-dashboard/local_settings

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = 'Default'

POLICY_FILES = {

'identity': 'policy.v3cloudsample.json',

}

To make the Domain option visible in the Identity section of the Horizon UI, we need to make the admin user a cloud_admin by assigning it the admin role on the default domain with the command below:

openstack role add --domain default --user admin admin

We also need to replace admin_domain_id in policy.v3cloudsample.json with the actual domain ID.

Change the line below

"cloud_admin": "role:admin and (is_admin_project:True or domain_id:admin_domain_id)",

to

"cloud_admin": "role:admin and (is_admin_project:True or domain_id:default)",

Restart the Horizon service for the changes to take effect

service httpd restart
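If you would rather script the cloud_admin change than edit the JSON by hand, a sed substitution limited to the "cloud_admin" line is enough. A minimal sketch, assuming the policy file sits at /etc/openstack-dashboard/policy.v3cloudsample.json and the domain ID is default as above; the helper name is illustrative. Run it before the httpd restart (or restart httpd again afterwards).

```shell
#!/usr/bin/env bash
# Point the cloud_admin rule at a concrete domain ID instead of the
# admin_domain_id placeholder. Only the line containing "cloud_admin": changes.
set_cloud_admin_domain() {
  local policy_file="$1" domain_id="${2:-default}"
  sed -i "/\"cloud_admin\":/s/domain_id:admin_domain_id/domain_id:${domain_id}/" "$policy_file"
}

# Usage on os-controller0:
#   set_cloud_admin_domain /etc/openstack-dashboard/policy.v3cloudsample.json default
#   service httpd restart
```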

Setup Ceph Cluster

To complete this guide, you will need the storage nodes below:

Nodes used in the Ceph cluster

- 1x Ceph Monitor – Baremetal

- 3x Ceph OSD nodes – Baremetal

- 1x Ceph Admin node – Virtual Machine

For HA, a minimum of 3 Ceph monitors is recommended; however, I am using 1 Ceph monitor. We will use the ceph-deploy utility to install the Ceph cluster, running it from a separate VM named ceph-admin. For the hardware specs and connectivity, see the OpenStack section above.

Installing Ceph-deploy on admin node

Add the Ceph repo to the ceph-admin node

vi /etc/yum.repos.d/ceph.repo

[ceph-noarch]

name=Ceph noarch packages

baseurl=http://download.ceph.com/rpm-kraken/el7/noarch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

Add cephuser on all the Ceph nodes, including the admin node, and grant it passwordless sudo

useradd -d /home/cephuser -m cephuser

passwd cephuser

echo "cephuser ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/cephuser

chmod 0440 /etc/sudoers.d/cephuser

On the ceph-admin node, edit the sudoers file using visudo and comment out the line "Defaults requiretty", or use this command:

sed -i 's/^Defaults[[:space:]]\+requiretty/#&/' /etc/sudoers

Create an SSH key on the ceph-admin node and copy it to all the Ceph nodes

ssh-keygen

ssh-copy-id [email protected]

ssh-copy-id [email protected]

ssh-copy-id [email protected]

ssh-copy-id [email protected]

Modify the ~/.ssh/config file on your ceph-deploy admin node so that ceph-deploy can log in to the Ceph nodes as the user you created, without requiring you to specify --username each time you execute ceph-deploy

vi ~/.ssh/config

Host ceph-mon1.scaleon.io

Hostname ceph-mon1.scaleon.io

User cephuser

Host ceph-osd0.scaleon.io

Hostname ceph-osd0.scaleon.io

User cephuser

Host ceph-osd1.scaleon.io

Hostname ceph-osd1.scaleon.io

User cephuser

Host ceph-osd2.scaleon.io

Hostname ceph-osd2.scaleon.io

User cephuser

Install ceph-deploy on the ceph-admin node

sudo yum install ceph-deploy
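The four ~/.ssh/config stanzas shown earlier follow one pattern, so they can be generated rather than typed. A small sketch that prints one stanza per host; the host names are the ones used in this guide and the function name is illustrative.

```shell
#!/usr/bin/env bash
# Print ~/.ssh/config stanzas so ceph-deploy logs in as the given user.
ssh_config_for() {
  local user="$1"; shift
  local host
  for host in "$@"; do
    printf 'Host %s\n  Hostname %s\n  User %s\n\n' "$host" "$host" "$user"
  done
}

# Usage on ceph-admin:
#   ssh_config_for cephuser ceph-mon1.scaleon.io ceph-osd0.scaleon.io \
#     ceph-osd1.scaleon.io ceph-osd2.scaleon.io >> ~/.ssh/config
#   chmod 600 ~/.ssh/config
```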

Create Cluster

Run the commands below on the ceph-admin node to create the initial cluster

mkdir ceph-cluster

cd ceph-cluster

ceph-deploy new ceph-mon1.scaleon.io

Update ceph.conf (written by ceph-deploy new into the ceph-cluster directory) to reflect the public and cluster networks

Below is my ceph.conf. The default replica count is 3; however, I am using 2.

cat /etc/ceph/ceph.conf

[global]

fsid = 9c1c1ed7-cbc1-4055-a633-70b32dffad29

mon_initial_members = ceph-mon1

mon_host = 10.9.80.58

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

public network = 10.9.80.0/24

cluster network = 10.9.131.0/24

#Choose reasonable numbers for number of replicas and placement groups.

osd pool default size = 2 # Write an object 2 times

osd pool default min size = 1 # Allow writing 1 copy in a degraded state

osd pool default pg num = 256

osd pool default pgp num = 256

#Choose a reasonable crush leaf type

#0 for a 1-node cluster.

#1 for a multi node cluster in a single rack

#2 for a multi node, multi chassis cluster with multiple hosts in a chassis

#3 for a multi node cluster with hosts across racks, etc.

osd crush chooseleaf type = 1
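The pg num values above (and the 128 used per OpenStack pool later) are usually sized with the rule of thumb from the pre-Luminous Ceph placement-group documentation: total PGs = (OSDs × 100) / replica count, rounded up to the next power of two. A small helper for that arithmetic, as a sketch; 100 PGs per OSD is the documented target value and the function name is illustrative.

```shell
#!/usr/bin/env bash
# Rule-of-thumb total placement-group count for a cluster:
#   ceil(OSDs * PGs-per-OSD / replicas), rounded up to a power of two.
pg_total() {
  local osds="$1" replicas="$2" per_osd="${3:-100}"
  # Integer ceiling division.
  local raw=$(( (osds * per_osd + replicas - 1) / replicas ))
  local pow=1
  while (( pow < raw )); do
    pow=$(( pow * 2 ))
  done
  echo "$pow"
}
```

For this cluster (9 OSDs, size 2), `pg_total 9 2` gives 512. That figure is a budget for the whole cluster, shared by all pools, not a per-pool pg_num.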

Deploy Ceph on all the nodes from the admin node. ceph-deploy will download the required Ceph packages on all the nodes and install Ceph.

ceph-deploy install ceph-mon1.scaleon.io ceph-osd0.scaleon.io ceph-osd1.scaleon.io ceph-osd2.scaleon.io

Preparing OSD and journal disks on the ceph-osd nodes

Each Ceph OSD node has the configuration below

- 1 x 500GB OS Disk

- 1 x 256 GB SSD for journal

- 1 x 256 GB SSD for caching (I have not enabled caching yet; I will cover it in another article)

- 3 x 2TB for OSD

Run the commands below on all the ceph-osd nodes to partition and format the disks

Journal disk

parted -s /dev/sdb mklabel gpt

parted -s /dev/sdb mkpart primary 0% 33%

parted -s /dev/sdb mkpart primary 34% 66%

parted -s /dev/sdb mkpart primary 67% 100%

OSDs

parted -s /dev/sdc mklabel gpt

parted -s /dev/sdc mkpart primary xfs 0% 100%

mkfs.xfs /dev/sdc -f

parted -s /dev/sdd mklabel gpt

parted -s /dev/sdd mkpart primary xfs 0% 100%

mkfs.xfs /dev/sdd -f

parted -s /dev/sde mklabel gpt

parted -s /dev/sde mkpart primary xfs 0% 100%

mkfs.xfs /dev/sde -f

Preparing and adding OSDs to the cluster

ceph-deploy osd create ceph-osd0.scaleon.io:sdc:/dev/sdb1 ceph-osd0.scaleon.io:sdd:/dev/sdb2 ceph-osd0.scaleon.io:sde:/dev/sdb3

ceph-deploy osd create ceph-osd1.scaleon.io:sdc:/dev/sdb1 ceph-osd1.scaleon.io:sdd:/dev/sdb2 ceph-osd1.scaleon.io:sde:/dev/sdb3

ceph-deploy osd create ceph-osd2.scaleon.io:sdc:/dev/sdb1 ceph-osd2.scaleon.io:sdd:/dev/sdb2 ceph-osd2.scaleon.io:sde:/dev/sdb3

If the OSDs fail to come up and the permission errors below appear in the log, the journal partitions are not owned by the ceph user:

** ERROR: error creating empty object store in /var/lib/ceph/tmp/mnt.cM4pRP: (13) Permission denied

filestore(/var/lib/ceph/tmp/mnt.cM4pRP) mkjournal error creating journal on /var/lib/ceph/tmp/mnt.cM4pRP/journal: (13) Permission denied

Change the ownership of the journal partitions on all ceph-osd nodes, then activate the OSDs

chown ceph:ceph /dev/sdb1

chown ceph:ceph /dev/sdb2

chown ceph:ceph /dev/sdb3

Then activate the disks by running the commands below from the ceph-admin node

ceph-deploy osd activate ceph-osd0.scaleon.io:/dev/sdc1:/dev/sdb1 ceph-osd0.scaleon.io:/dev/sdd1:/dev/sdb2 ceph-osd0.scaleon.io:/dev/sde1:/dev/sdb3

ceph-deploy osd activate ceph-osd1.scaleon.io:/dev/sdc1:/dev/sdb1 ceph-osd1.scaleon.io:/dev/sdd1:/dev/sdb2 ceph-osd1.scaleon.io:/dev/sde1:/dev/sdb3

ceph-deploy osd activate ceph-osd2.scaleon.io:/dev/sdc1:/dev/sdb1 ceph-osd2.scaleon.io:/dev/sdd1:/dev/sdb2 ceph-osd2.scaleon.io:/dev/sde1:/dev/sdb3
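The chown above fixes ownership only until the next reboot: /dev is repopulated at boot and udev applies its own rules. As far as I know, Ceph's packaged udev rules match partitions by the Ceph journal partition-type GUID, which partitions created with plain parted (as above) don't carry. One common workaround is a udev rule of your own. This is a sketch assuming the journals are /dev/sdb1 to /dev/sdb3 on every OSD node; the rule file name 90-ceph-journal.rules and the function name are hypothetical.

```shell
#!/usr/bin/env bash
# Write a udev rule that gives the ceph user ownership of the journal
# partitions at every boot. Assumption: journals are /dev/sdb1..sdb3.
# The default file name is hypothetical; any name in rules.d works.
write_journal_udev_rule() {
  local rule_file="${1:-/etc/udev/rules.d/90-ceph-journal.rules}"
  cat > "$rule_file" <<'EOF'
KERNEL=="sdb[1-3]", SUBSYSTEM=="block", OWNER="ceph", GROUP="ceph", MODE="0660"
EOF
}

# Usage, as root on each ceph-osd node:
#   write_journal_udev_rule
#   udevadm control --reload-rules && udevadm trigger --subsystem-match=block
```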

Verify Ceph cluster status and health

[root@ceph-mon1 ~]# sudo ceph --version

ceph version 11.2.1 (e0354f9d3b1eea1d75a7dd487ba8098311be38a7)

[root@ceph-mon1 ~]#

[root@ceph-mon1 ~]# ceph health

HEALTH_OK

[root@ceph-mon1 ~]# ceph status

cluster 9c1c1ed7-cbc1-4055-a633-70b32dffad29

health HEALTH_OK

monmap e2: 1 mons at {ceph-mon1=10.9.80.58:6789/0}

election epoch 9, quorum 0 ceph-mon1

mgr active: ceph-mon1.scaleon.io

osdmap e161: 9 osds: 9 up, 9 in

flags sortbitwise,require_jewel_osds,require_kraken_osds

pgmap v338405: 1124 pgs, 8 pools, 223 GB data, 45209 objects

446 GB used, 16307 GB / 16754 GB avail

1124 active+clean

client io 72339 B/s wr, 0 op/s rd, 4 op/s wr

[root@ceph-mon1 ~]# ceph mon_status

{"name":"ceph-mon1","rank":0,"state":"leader","election_epoch":9,"quorum":[0],"features":{"required_con":"9025616074522624","required_mon":["kraken"],"quorum_con":"1152921504336314367","quorum_mon":["kraken"]},"outside_quorum":[],"extra_probe_peers":[],"sync_provider":[],"monmap":{"epoch":2,"fsid":"9c1c1ed7-cbc1-4055-a633-70b32dffad29","modified":"2017-08-26 18:37:51.589258","created":"2017-08-26 17:29:14.632576","features":{"persistent":["kraken"],"optional":[]},"mons":[{"rank":0,"name":"ceph-mon1","addr":"10.9.80.58:6789\/0","public_addr":"10.9.80.58:6789\/0"}]}}

[root@ceph-mon1 ~]# ceph quorum_status

{"election_epoch":9,"quorum":[0],"quorum_names":["ceph-mon1"],"quorum_leader_name":"ceph-mon1","monmap":{"epoch":2,"fsid":"9c1c1ed7-cbc1-4055-a633-70b32dffad29","modified":"2017-08-26 18:37:51.589258","created":"2017-08-26 17:29:14.632576","features":{"persistent":["kraken"],"optional":[]},"mons":[{"rank":0,"name":"ceph-mon1","addr":"10.9.80.58:6789\/0","public_addr":"10.9.80.58:6789\/0"}]}}

[root@ceph-mon1 ~]# ceph osd tree

ID WEIGHT TYPE NAME UP/DOWN REWEIGHT PRIMARY-AFFINITY

-1 16.36194 root default

-2 5.45398 host ceph-osd0

0 1.81799 osd.0 up 1.00000 1.00000

1 1.81799 osd.1 up 1.00000 1.00000

2 1.81799 osd.2 up 1.00000 1.00000

-3 5.45398 host ceph-osd1

3 1.81799 osd.3 up 1.00000 1.00000

4 1.81799 osd.4 up 1.00000 1.00000

5 1.81799 osd.5 up 1.00000 1.00000

-4 5.45398 host ceph-osd2

6 1.81799 osd.6 up 1.00000 1.00000

7 1.81799 osd.7 up 1.00000 1.00000

8 1.81799 osd.8 up 1.00000 1.00000

[root@ceph-mon1 ~]# ceph osd stat

osdmap e161: 9 osds: 9 up, 9 in

flags sortbitwise,require_jewel_osds,require_kraken_osds

I have also installed an open-source, web-based Ceph monitoring tool called ceph-dash on ceph-mon1. Refer to the link below for details about the tool

https://github.com/Crapworks/ceph-dash

git clone https://github.com/Crapworks/ceph-dash.git

cd ceph-dash/

nohup ./ceph-dash.py &

By default, the ceph-dash application runs on port 5000.
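If you also want a scriptable check alongside ceph-dash, for example from cron, the `ceph osd stat` line shown above is easy to parse. A minimal sketch assuming the Kraken output format (`osdmap eN: X osds: Y up, Z in`); other Ceph releases may print this differently, so the pattern may need adjusting. The function name is illustrative.

```shell
#!/usr/bin/env bash
# Return 0 if every OSD is both up and in, reading `ceph osd stat` on stdin.
# Return 1 if some OSD is down/out, 2 if no "osds:" line was found.
osds_all_up() {
  local line total up_count in_count
  line="$(grep -m1 'osds:')" || return 2
  # Extract the three numbers: total OSDs, up, in.
  read -r total up_count in_count < <(
    sed -E 's/^(.*[^0-9])?([0-9]+) osds: ([0-9]+) up, ([0-9]+) in.*$/\2 \3 \4/' <<< "$line"
  )
  [[ -n "$total" && "$total" == "$up_count" && "$total" == "$in_count" ]]
}

# Usage on ceph-mon1:
#   ceph osd stat | osds_all_up && echo "all OSDs up and in"
```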

Ceph Integration with Openstack

In this section we will discuss how to integrate OpenStack Glance, Cinder and Nova (ephemeral disks) with Ceph

Ceph as backend for Glance (images)

Glance is the image service. By default, images are stored locally on the controllers and then copied to compute hosts when requested. The compute hosts cache the images, but they need to be copied again every time an image is updated. Ceph provides a backend for Glance that allows images to be stored in Ceph instead of locally on the controller and compute nodes.

Install the Ceph packages below on all the OpenStack nodes

yum install python-rbd ceph-common -y

We will create a dedicated pool each for Glance, Cinder and Nova. Run the command below on the ceph-mon1 node to create the pool for Glance

sudo ceph osd pool create glance-images 128

Create a Ceph client for Glance, with a keyring for authentication

sudo ceph auth get-or-create client.glance-images mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=glance-images' -o /etc/ceph/ceph.client.glance-images.keyring

Copy the Glance Ceph keyring and the ceph.conf file to the OpenStack controller node.

scp /etc/ceph/ceph.client.glance-images.keyring [email protected]:/etc/ceph/

scp /etc/ceph/ceph.conf [email protected]:/etc/ceph/

Set the appropriate permissions on the keyring on the controller node

sudo chgrp glance /etc/ceph/ceph.client.glance-images.keyring

sudo chmod 0640 /etc/ceph/ceph.client.glance-images.keyring

Modify /etc/ceph/ceph.conf on openstack controller node and add the following lines:

[client.glance-images]

keyring = /etc/ceph/ceph.client.glance-images.keyring

On the OpenStack controller, edit /etc/glance/glance-api.conf and set the options below in the [glance_store] section

[glance_store]

stores = file,http,swift,rbd

default_store = rbd

rbd_store_pool = glance-images

rbd_store_user = glance-images

rbd_store_ceph_conf = /etc/ceph/ceph.conf
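In glance-api.conf these options are read from the [glance_store] section, so a key placed under another section is silently ignored. To confirm each value landed where intended, a small awk-based reader is enough. A minimal sketch with an illustrative function name; it skips comment lines and does not handle multi-line values.

```shell
#!/usr/bin/env bash
# Print the value of KEY in [SECTION] of an INI-style file (e.g. glance-api.conf).
# Prints nothing if the key is absent from that section.
ini_get() {
  local file="$1" section="$2" key="$3"
  awk -v sec="[$section]" -v key="$key" '
    /^[[:space:]]*[#;]/ { next }                        # skip comments
    /^[[:space:]]*\[/ {                                 # section header
      s = $0; gsub(/[[:space:]]/, "", s); in_sec = (s == sec); next
    }
    in_sec {
      split($0, kv, "="); k = kv[1]; gsub(/[[:space:]]/, "", k)
      if (k == key) {
        v = substr($0, index($0, "=") + 1)
        gsub(/^[[:space:]]+|[[:space:]]+$/, "", v)      # trim the value
        print v; exit
      }
    }' "$file"
}

# Usage on os-controller0:
#   ini_get /etc/glance/glance-api.conf glance_store default_store    # expect rbd
#   ini_get /etc/glance/glance-api.conf glance_store rbd_store_pool   # expect glance-images
```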

Restart the glance-api service

openstack-service restart openstack-glance-api

Let's verify that images are being stored in Ceph

Download a CentOS cloud image

wget https://cloud.centos.org/centos/7/images/CentOS-7-x86_64-GenericCloud-1706.qcow2

Create a Glance image from the downloaded CentOS cloud image

openstack image create --disk-format qcow2 --container-format bare --public --file CentOS-7-x86_64-GenericCloud-1706.qcow2 CentOS_7x64

+------------------+--------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------------+--------------------------------------------------------------------------------------------------------------------------------+

| checksum | c03e55c22b6fb2127e7de391b488d8d6 |

| container_format | bare |

| created_at | 2017-09-03T11:23:31Z |

| disk_format | qcow2 |

| file | /v2/images/0749bafc-847e-4808-a566-b3844286bb6c/file |

| id | 0749bafc-847e-4808-a566-b3844286bb6c |

| min_disk | 0 |

| min_ram | 0 |

| name | CentOS_7x64 |

| owner | ea541855636f480f9fabc88d53922410 |

| properties | direct_url='rbd://9c1c1ed7-cbc1-4055-a633-70b32dffad29/glance-images/0749bafc-847e-4808-a566-b3844286bb6c/snap', |

| | locations='[{u'url': u'rbd://9c1c1ed7-cbc1-4055-a633-70b32dffad29/glance-images/0749bafc-847e-4808-a566-b3844286bb6c/snap', |

| | u'metadata': {}}]' |

| protected | False |

| schema | /v2/schemas/image |

| size | 1384972288 |

| status | active |

| tags | |

| updated_at | 2017-09-03T11:24:21Z |

| virtual_size | None |

| visibility | public |

+------------------+--------------------------------------------------------------------------------------------------------------------------------+

[root@os-controller0 ~(keystone_admin)]#

Check in Ceph whether the image was created

[root@ceph-mon1 ~]# rbd -p glance-images ls

0749bafc-847e-4808-a566-b3844286bb6c

[root@ceph-mon1 ~]# rbd -p glance-images info 0749bafc-847e-4808-a566-b3844286bb6c

rbd image '0749bafc-847e-4808-a566-b3844286bb6c':

size 1320 MB in 166 objects

order 23 (8192 kB objects)

block_name_prefix: rbd_data.1b5cc4e81bab6

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

flags:

Ceph as Cinder backend for Volumes(block)

Cinder is the block storage service, which provides an abstraction for block storage. In Ceph, each storage pool can be mapped to a different Cinder backend. This allows for creating tiered storage services such as SSD and non-SSD.

We will create a dedicated pool for Cinder. Run the command below on the ceph-mon1 node

sudo ceph osd pool create cinder-volumes 128

Create a Ceph client for Cinder, with a keyring for authentication

sudo ceph auth get-or-create client.cinder-volumes mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=cinder-volumes, allow rwx pool=glance-images' -o /etc/ceph/ceph.client.cinder-volumes.keyring

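Copy the auth keyring to the controller node and the auth key to the compute nodes

scp /etc/ceph/ceph.client.cinder-volumes.keyring os-controller0.scaleon.io:/etc/ceph/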

sudo ceph auth get-key client.cinder-volumes |ssh os-compute0.scaleon.io tee client.cinder-volumes.key

sudo ceph auth get-key client.cinder-volumes |ssh os-compute1.scaleon.io tee client.cinder-volumes.key

sudo ceph auth get-key client.cinder-volumes |ssh os-compute3.scaleon.io tee client.cinder-volumes.key

sudo ceph auth get-key client.cinder-volumes |ssh os-compute4.scaleon.io tee client.cinder-volumes.key

Set the appropriate permissions on the keyring on the controller node

sudo chgrp cinder /etc/ceph/ceph.client.cinder-volumes.keyring

sudo chmod 0640 /etc/ceph/ceph.client.cinder-volumes.keyring

Edit /etc/ceph/ceph.conf on the OpenStack controller node and add the following lines:

[client.cinder-volumes]

keyring = /etc/ceph/ceph.client.cinder-volumes.keyring
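The [client.…] sections added to ceph.conf for each OpenStack service all have the same two-line shape. A small idempotent helper keeps a re-run setup script from appending duplicate sections. This is a sketch that assumes keyrings live at /etc/ceph/ceph.client.NAME.keyring, the naming used throughout this guide; the function name is illustrative.

```shell
#!/usr/bin/env bash
# Append a [client.NAME] section with its keyring path to a ceph.conf,
# unless that section header is already present (safe to re-run).
add_ceph_client_section() {
  local conf="$1" client="$2"
  local header="[client.${client}]"
  if grep -qxF "$header" "$conf"; then
    return 0
  fi
  printf '\n%s\nkeyring = /etc/ceph/ceph.client.%s.keyring\n' "$header" "$client" >> "$conf"
}

# Usage on os-controller0:
#   add_ceph_client_section /etc/ceph/ceph.conf glance-images
#   add_ceph_client_section /etc/ceph/ceph.conf cinder-volumes
```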

Generate a UUID for the libvirt integration:

uuidgen

dfab05a7-a868-43c9-8eee-49510d8fd742

On the OpenStack compute nodes, create a new file ceph.xml:

<secret ephemeral="no" private="no">

<uuid>dfab05a7-a868-43c9-8eee-49510d8fd742</uuid>

<usage type="ceph">

<name>client.cinder-volumes secret</name>

</usage>

</secret>

Define a secret on all the compute nodes using the ceph.xml file

$ virsh secret-define --file ceph.xml

Secret dfab05a7-a868-43c9-8eee-49510d8fd742 created

$ virsh secret-set-value \
  --secret dfab05a7-a868-43c9-8eee-49510d8fd742 \
  --base64 $(cat client.cinder-volumes.key)
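ceph.xml can also be generated from the UUID and the client name, which makes it easy to produce the identical file on every compute node. The UUID must be the same everywhere, because cinder.conf carries a single rbd_secret_uuid that libvirt on whichever compute node attaches the volume has to recognize. A sketch with an illustrative function name:

```shell
#!/usr/bin/env bash
# Print a libvirt secret definition for a Ceph client (same layout as ceph.xml).
make_secret_xml() {
  local uuid="$1" client="$2"
  cat <<EOF
<secret ephemeral="no" private="no">
  <uuid>${uuid}</uuid>
  <usage type="ceph">
    <name>client.${client} secret</name>
  </usage>
</secret>
EOF
}

# Usage on each compute node:
#   make_secret_xml dfab05a7-a868-43c9-8eee-49510d8fd742 cinder-volumes > ceph.xml
#   virsh secret-define --file ceph.xml
```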

On the OpenStack controller node, edit /etc/cinder/cinder.conf and add the following configuration

[DEFAULT]

enabled_backends = rbd

[rbd]

volume_backend_name=rbd

rbd_pool=cinder-volumes

rbd_user=cinder-volumes

rbd_secret_uuid=dfab05a7-a868-43c9-8eee-49510d8fd742

volume_driver=cinder.volume.drivers.rbd.RBDDriver

rbd_ceph_conf=/etc/ceph/ceph.conf

Restart the cinder-api and cinder-volume services

service openstack-cinder-api restart

service openstack-cinder-volume restart

Let's verify that volumes are being created in Ceph

[root@os-controller0 ~(keystone_admin)]# openstack volume list

+--------------------------------------+-------------------+-----------+------+-------------+

| ID | Display Name | Status | Size | Attached to |

+--------------------------------------+-------------------+-----------+------+-------------+

| f4ec72cf-8a06-485f-a5ed-070257e35203 | vol1 | available | 80 | |

| a0d9f11c-a7ed-445d-80e8-d06fe5f3e234 | vol2 | available | 80 | |

+--------------------------------------+-------------------+-----------+------+-------------+

[root@os-controller0 ~(keystone_admin)]# openstack volume show a0d9f11c-a7ed-445d-80e8-d06fe5f3e234

+--------------------------------+--------------------------------------+

| Field | Value |

+--------------------------------+--------------------------------------+

| attachments | [] |

| availability_zone | nova |

| bootable | false |

| consistencygroup_id | None |

| created_at | 2017-08-31T19:48:08.000000 |

| description | |

| encrypted | False |

| id | a0d9f11c-a7ed-445d-80e8-d06fe5f3e234 |

| migration_status | None |

| multiattach | False |

| name | vol2 |

| os-vol-host-attr:host | os-controller0.scaleon.io@rbd#rbd |

| os-vol-mig-status-attr:migstat | None |

| os-vol-mig-status-attr:name_id | None |

| os-vol-tenant-attr:tenant_id | ea541855636f480f9fabc88d53922410 |

| properties | readonly='False' |

| replication_status | disabled |

| size | 80 |

| snapshot_id | None |

| source_volid | None |

| status | available |

| type | RBD |

| updated_at | 2017-09-02T16:37:12.000000 |

| user_id | 62fdde197cb64eb68f42f05474ecb71c |

+--------------------------------+--------------------------------------+

[root@os-controller0 ~(keystone_admin)]#

On Ceph

[root@ceph-mon1 ~]# rbd -p cinder-volumes ls

volume-a0d9f11c-a7ed-445d-80e8-d06fe5f3e234

volume-f4ec72cf-8a06-485f-a5ed-070257e35203

[root@ceph-mon1 ~]# rbd -p cinder-volumes info volume-a0d9f11c-a7ed-445d-80e8-d06fe5f3e234

rbd image 'volume-a0d9f11c-a7ed-445d-80e8-d06fe5f3e234':

size 81920 MB in 20480 objects

order 22 (4096 kB objects)

block_name_prefix: rbd_data.15c54136cf8c

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

flags:

[root@ceph-mon1 ~]#

Ceph as Nova backend for instance disks (ephemeral)

We will create a dedicated pool for Nova ephemeral disks. Run the command below on the ceph-mon1 node

sudo ceph osd pool create nova-ephemeral 128

Create a Ceph client for nova-ephemeral, with a keyring for authentication

sudo ceph auth get-or-create client.nova-ephemeral mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=cinder-volumes, allow rwx pool=glance-images, allow rwx pool=nova-ephemeral' -o /etc/ceph/ceph.client.nova-ephemeral.keyring
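The OSD capability strings for client.glance-images, client.cinder-volumes and client.nova-ephemeral share one pattern, and hand-typed variants are easy to get wrong (a misspelled rbd_children, for example). Building the string from a pool list avoids that. A sketch with an illustrative function name; the output matches the caps used above.

```shell
#!/usr/bin/env bash
# Build the OSD caps string for an OpenStack Ceph client:
# class-read on rbd_children plus rwx on each listed pool.
osd_caps_for_pools() {
  local caps="allow class-read object_prefix rbd_children"
  local pool
  for pool in "$@"; do
    caps+=", allow rwx pool=${pool}"
  done
  printf '%s\n' "$caps"
}

# Usage on ceph-mon1:
#   sudo ceph auth get-or-create client.nova-ephemeral mon 'allow r' \
#     osd "$(osd_caps_for_pools cinder-volumes glance-images nova-ephemeral)" \
#     -o /etc/ceph/ceph.client.nova-ephemeral.keyring
```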

Copy the auth keyring and the auth key to all the compute nodes

scp /etc/ceph/ceph.client.nova-ephemeral.keyring [email protected]:/etc/ceph/

scp /etc/ceph/ceph.client.nova-ephemeral.keyring [email protected]:/etc/ceph/

scp /etc/ceph/ceph.client.nova-ephemeral.keyring [email protected]:/etc/ceph/

scp /etc/ceph/ceph.client.nova-ephemeral.keyring [email protected]:/etc/ceph/

ceph auth get-key client.nova-ephemeral |ssh os-compute0.scaleon.io tee client.nova-ephemeral.key

ceph auth get-key client.nova-ephemeral |ssh os-compute1.scaleon.io tee client.nova-ephemeral.key

ceph auth get-key client.nova-ephemeral |ssh os-compute3.scaleon.io tee client.nova-ephemeral.key

ceph auth get-key client.nova-ephemeral |ssh os-compute4.scaleon.io tee client.nova-ephemeral.key


Edit /etc/ceph/ceph.conf on all OpenStack compute nodes and add the following lines:

[client.nova-ephemeral]

keyring = /etc/ceph/ceph.client.nova-ephemeral.keyring

Set appropriate permissions

chgrp nova /etc/ceph/ceph.client.nova-ephemeral.keyring

chmod 0640 /etc/ceph/ceph.client.nova-ephemeral.keyring

Generate a UUID for the libvirt integration:

uuidgen

2909eaae-657c-4e11-bba8-e4e5504f04a2

On the OpenStack compute nodes, create a new file ceph-nova.xml:

<secret ephemeral="no" private="no">

<uuid>2909eaae-657c-4e11-bba8-e4e5504f04a2</uuid>

<usage type="ceph">

<name>client.nova-ephemeral secret</name>

</usage>

</secret>

Define a secret on all the compute nodes using ceph-nova.xml file

$ virsh secret-define --file ceph-nova.xml

Secret 2909eaae-657c-4e11-bba8-e4e5504f04a2 created

$ virsh secret-set-value \
  --secret 2909eaae-657c-4e11-bba8-e4e5504f04a2 \
  --base64 $(cat client.nova-ephemeral.key)

Edit /etc/nova/nova.conf on all the compute nodes with the parameters below

[libvirt]

images_rbd_pool=nova-ephemeral

images_rbd_ceph_conf = /etc/ceph/ceph.conf

images_type=rbd

rbd_secret_uuid=2909eaae-657c-4e11-bba8-e4e5504f04a2

rbd_user=nova-ephemeral

Restart Nova compute service on all the compute nodes

systemctl restart openstack-nova-compute

Let's verify that Nova instance disks are being created in Ceph

[root@os-controller0 ~(keystone_admin)]# openstack server list

+--------------------------------------+-------------------+--------+--------------------------------------------+-----------------------------+

| ID | Name | Status | Networks | Image Name |

+--------------------------------------+-------------------+--------+--------------------------------------------+-----------------------------+

| 59a4241c-a438-432d-9602-3512dfc7e05f | win-jumpbox-2 | ACTIVE | APP_172.16.11.X=172.16.11.16 | Windows_Server_2012R2x86_64 |

| f938898c-c9e7-41b7-aafc-a93c8ffefaab | win-jumpbox-1 | ACTIVE | INFRA_172.16.10.X=172.16.10.20, 10.9.81.3 | Windows_Server_2012R2x86_64 |

| 18bd30a6-0855-45d4-8ed3-6edd732561db | lnx-jumpbox-1 | ACTIVE | INFRA_172.16.10.X=172.16.10.14, 10.9.81.2 | CentOS_7x86_64 |

+--------------------------------------+-------------------+--------+--------------------------------------------+-----------------------------+

[root@os-controller0 ~(keystone_admin)]#

On Ceph

[root@ceph-mon1 ~]# rbd -p nova-ephemeral ls

18bd30a6-0855-45d4-8ed3-6edd732561db_disk

59a4241c-a438-432d-9602-3512dfc7e05f_disk

f938898c-c9e7-41b7-aafc-a93c8ffefaab_disk

[root@ceph-mon1 ~]# rbd -p nova-ephemeral info f938898c-c9e7-41b7-aafc-a93c8ffefaab_disk

rbd image 'f938898c-c9e7-41b7-aafc-a93c8ffefaab_disk':

size 40960 MB in 10240 objects

order 22 (4096 kB objects)

block_name_prefix: rbd_data.ecf9238e1f29

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

flags:

[root@ceph-mon1 ~]#

Drop a comment if you run into an issue or need more information.

Thanks.