How to integrate F5 with Kubernetes for application load-balancing

By Bikram Singh / May 09,2018

Overview

In this post I will demonstrate how to use an F5 load balancer with Kubernetes to load-balance Kubernetes services (applications). Kubernetes has a built-in load balancer that works out of the box; however, enterprises often use dedicated hardware or software load balancers for performance and advanced load-balancing features.

F5 Kubernetes Integration overview

The F5 integration for Kubernetes consists of the F5 BIG-IP Controller for Kubernetes and the F5 Application Services Proxy (ASP). The BIG-IP Controller is a Docker container that runs in a Kubernetes pod. It configures BIG-IP Local Traffic Manager (LTM) objects for applications in a Kubernetes cluster, serving north-south traffic. Once the controller pod is running, it watches the Kubernetes API. The controller (referred to as F5-k8s-controller in the rest of this post) uses user-defined “F5 resources”. These are Kubernetes ConfigMap resources that pass encoded data to the F5-k8s-controller. They tell the F5-k8s-controller:

- What objects to configure on your BIG-IP

- What Kubernetes Service the BIG-IP objects belong to (the frontend and backend properties in the ConfigMap, respectively).

The f5-k8s-controller watches for the creation and modification of F5 resources in Kubernetes. When it discovers changes, it modifies the BIG-IP F5 load-balancer accordingly. For example, for an F5 virtualServer resource, the F5-k8s-controller does the following:

- Creates objects to represent the virtual server on the F5 load-balancer in the specified partition

- Creates pool members for each node in the Kubernetes cluster, using the NodePort assigned to the service port by Kubernetes

- Monitors the F5 resources and linked Kubernetes resources for changes and reconfigures the F5 load-balancer accordingly.

- The F5-loadbalancer then handles traffic for the Service on the specified virtual address and load-balances to all nodes in the cluster.

- Within the cluster, the allocated NodePort is load-balanced to all pods for the Service.
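The pool-member step above can be sketched in a few lines of Python. This is only an illustration of the idea (one pool member per node, all on the Service's NodePort), not the controller's actual code; the `PoolMember` class, the `build_pool_members` helper, and the node addresses are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PoolMember:
    address: str  # node IP reachable from the load balancer
    port: int     # NodePort allocated by Kubernetes for the Service


def build_pool_members(node_ips, node_port):
    """Return one pool member per unique cluster node, all on the same NodePort."""
    return [PoolMember(address=ip, port=node_port) for ip in sorted(set(node_ips))]


# Hypothetical node addresses and NodePort, for illustration only.
members = build_pool_members(["10.9.110.11", "10.9.110.12"], 32353)
for member in members:
    print(f"{member.address}:{member.port}")
```

Because every node listens on the NodePort, the load balancer does not need to know where the pods run; the node forwards the traffic to a pod of the Service.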

F5 can be used for the following purposes:

- Application Services Proxy

- F5-proxy for Kubernetes (replaces kube-proxy)

- Basic load-balancer (load-balance on NodePort)

- Ingress Controller

Application Services Proxy

The Application Services Proxy provides load balancing for containerized applications, serving east-west traffic. The Application Services Proxy (ASP) also provides container-to-container load balancing, traffic visibility, and inline programmability for applications. Its light form factor allows for rapid deployment in datacenters and across cloud services. The ASP integrates with container environment management and orchestration systems and enables application delivery service automation.

The Application Services Proxy collects traffic statistics for the Services it load balances; these stats are either logged locally or sent to an external analytics application.

F5-proxy for Kubernetes

The F5-proxy for Kubernetes – F5-proxy – replaces the standard Kubernetes network proxy, or kube-proxy. The ASP and F5-proxy work together to proxy traffic for Kubernetes Services as follows:

- The F5-proxy provides the same L4 services as kube-proxy, including iptables and basic load balancing.

- For Services that have the ASP Service annotation, the F5-proxy hands off traffic to the ASP running on the same node as the client.

- The ASP then provides L7 traffic services and L7 telemetry to your Kubernetes Service.

Ingress and Ingress Controllers

The Ingress resource is a set of rules that map to Kubernetes services. Ingress is used to define how a service can be accessed externally. Ingress resources are defined purely within Kubernetes as an object that other objects can watch and respond to.

Ingress Controllers

For the Ingress resource to work, the cluster must have an Ingress controller running. This is unlike other types of controllers, which typically run as part of the kube-controller-manager binary and are started automatically as part of cluster creation. You need to choose the Ingress controller implementation that best fits your cluster, or implement one. An Ingress controller is a container that runs as a pod and works directly with the Kubernetes API, watching for state changes. Ingress controllers usually track and communicate with the endpoints behind services instead of using the services directly, which also lets us manage the balancing strategy from the load balancer. Common Ingress controllers include the NGINX Ingress controller and Traefik; F5 can also be used as an Ingress controller.

Source : clouddocs.f5.com

Let's get started with F5 and Kubernetes and see how things work.

Prerequisites

- F5 load balancer (virtual or physical). I am using the Virtual Edition.

- An F5 load-balancer partition set up for the F5-k8s-controller, one per controller.

- A user with admin access to this partition on the F5 load balancer.

Kubernetes Cluster components

- Kubernetes v1.7.4

- Docker v17.05.0-ce (https://github.com/docker/docker-ce)

- CNI (Container Networking) plugin v0.5.2

- etcd v3.2.6

- Calico v1.10.0 (https://github.com/projectcalico/calico)

- CentOS 7.3 base OS.

- One node that acts as both kube-master and kube-worker

Kubernetes cluster info

[root@kube-sandbox ~]# kubectl get nodes
NAME           STATUS    AGE       VERSION
kube-sandbox   Ready     1d        v1.7.4
[root@kube-sandbox ~]# kubectl get cs
NAME                 STATUS    MESSAGE              ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health": "true"}

Labs:

In this article I will cover two uses of F5: load balancing on NodePort and acting as an Ingress controller.

- F5 as basic load-balancer to load-balance applications on NodePort

- We will deploy the F5-k8s-controller and use F5 as a load balancer.

- We will deploy NGINX and Tomcat web servers with NodePort exposed via a service.

- The F5-k8s-controller will create a virtual server on the F5 load balancer that points to the NodePort on kube-worker. Kube-worker will be the pool member in the virtual server, with the NodePort as the service port.

First we will create a partition called kubernetes on the F5 load balancer, because the F5-k8s-controller cannot create resources in the Common partition.

Let's deploy the F5-k8s-controller on the Kubernetes cluster.

If RBAC is enabled on the cluster, we first need to create the cluster role and its binding by deploying the YAML below.

[root@kube-sandbox ~]# cat /f5-controller-rbac.yaml
#for use in k8s clusters using RBAC
#for Openshift use the openshift specific examples
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: bigip-ctlr-clusterrole
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - services
  - endpoints
  - namespaces
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - extensions
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  - events
  verbs:
  - get
  - list
  - watch
  - update
  - create
  - patch
- apiGroups:
  - "extensions"
  resources:
  - ingresses/status
  verbs:
  - get
  - list
  - watch
  - update
  - create
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: bigip-ctlr-clusterrole-binding
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: bigip-ctlr-clusterrole
subjects:
- kind: ServiceAccount
  name: bigip-ctlr-serviceaccount
  namespace: kube-system

[root@kube-sandbox ~]# kubectl create -f /f5-controller-rbac.yaml
clusterrole "bigip-ctlr-clusterrole" created
clusterrolebinding "bigip-ctlr-clusterrole-binding" created

Deploy the F5-k8s-controller container using the YAML file below. Note that we need to specify the F5 load-balancer IP, the partition, and the username/password. For the credentials we will create a Kubernetes secret.

[root@kube-sandbox ~]# kubectl create secret generic bigip-login --namespace kube-system --from-literal=username=admin --from-literal=password=admin
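Kubernetes stores each literal passed to `kubectl create secret generic` as a base64-encoded string in the Secret's `data` field. The sketch below reproduces that encoding in Python to show what ends up in the object; the helper names are illustrative and not part of any Kubernetes client. Keep in mind that base64 is an encoding, not encryption, so anyone who can read the Secret can recover the password.

```python
import base64


def encode_secret_data(literals):
    """Base64-encode each value, as the `data` field of a Secret manifest expects."""
    return {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in literals.items()
    }


def decode_secret_data(data):
    """Reverse the encoding, e.g. to inspect a value read back from the cluster."""
    return {key: base64.b64decode(value).decode("utf-8") for key, value in data.items()}


# Same literals as the bigip-login secret created above.
encoded = encode_secret_data({"username": "admin", "password": "admin"})
print(encoded)
print(decode_secret_data(encoded))
```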

[root@kube-sandbox ~]# cat /f5-controller.yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: k8s-bigip-ctlr-deployment
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      name: k8s-bigip-ctlr
      labels:
        app: k8s-bigip-ctlr
    spec:
      serviceAccountName: bigip-ctlr-serviceaccount
      containers:
        - name: k8s-bigip-ctlr
          # replace the version as needed
          image: "f5networks/k8s-bigip-ctlr:1.1.1"
          env:
            - name: BIGIP_USERNAME
              valueFrom:
                secretKeyRef:
                  name: bigip-login
                  key: username
            - name: BIGIP_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: bigip-login
                  key: password
          command: ["/app/bin/k8s-bigip-ctlr"]
          args: [
            "--bigip-username=$(BIGIP_USERNAME)",
            "--bigip-password=$(BIGIP_PASSWORD)",
            "--bigip-url=10.9.110.80",
            "--bigip-partition=kubernetes",
            # To manage a single namespace, enter it below
            # (required in v1.0.0)
            # To manage all namespaces, omit the `namespace` entry
            # (default as of v1.1.0)
            # To manage multiple namespaces, enter a separate flag for each
            # namespace below (as of v1.1.0)
            "--namespace=default",
            ]
      imagePullSecrets:
        - name: f5-docker-images
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: bigip-ctlr-serviceaccount
  namespace: kube-system

[root@kube-sandbox ~]# kubectl create -f /f5-controller.yaml
deployment "k8s-bigip-ctlr-deployment" created
serviceaccount "bigip-ctlr-serviceaccount" created

[root@kube-sandbox ~]# kubectl get pods -n kube-system
NAME                                         READY     STATUS    RESTARTS   AGE
calico-policy-controller-2369515389-5mx4h    1/1       Running   0          1d
k8s-bigip-ctlr-deployment-1734878172-hljql   1/1       Running   0          3m
kube-dns-93785515-x2djc                      4/4       Running   0          1d

[root@kube-sandbox ~]# kubectl logs k8s-bigip-ctlr-deployment-1734878172-hljql -n kube-system
2017/09/28 06:39:55 [INFO] ConfigWriter started: 0xc42044b080
2017/09/28 06:39:55 [INFO] Started config driver sub-process at pid: 19
2017/09/28 06:39:55 [INFO] NodePoller (0xc420410680) registering new listener: 0x405770
2017/09/28 06:39:55 [INFO] NodePoller started: (0xc420410680)
2017/09/28 06:39:55 [INFO] Wrote 0 Virtual Server configs
2017/09/28 06:39:56 [INFO] [2017-09-28 06:39:56,115 __main__ INFO] entering inotify loop to watch /tmp/k8s-bigip-ctlr.config211427345/config.json

Now that the F5-k8s-controller pod is running, we will create two pods (NGINX and Tomcat) and expose their container ports via NodePort using services. We will also create a ConfigMap resource for each that specifies the F5 virtual server details, so that the F5-k8s-controller can create the respective virtual servers on the F5 load balancer.
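The ConfigMaps used in this post share the same JSON shape under their `data` key: a `frontend` describing the virtual server and a `backend` naming the Kubernetes Service. As a rough sketch, that JSON could be generated with a small Python helper instead of being written by hand. The function name and the port check are my own additions for illustration; the schema referenced in the ConfigMap remains the authority on which fields are valid.

```python
import json


def virtual_server_config(service_name, service_port, bind_addr, vip_port=80,
                          partition="kubernetes"):
    """Build the JSON document that goes under the ConfigMap's `data` key."""
    # Simple sanity check (an assumption of this sketch, not the controller's validation).
    for name, port in (("service_port", service_port), ("vip_port", vip_port)):
        if not 1 <= port <= 65535:
            raise ValueError(f"{name} out of range: {port}")
    return {
        "virtualServer": {
            "frontend": {
                "balance": "round-robin",
                "mode": "http",
                "partition": partition,
                "virtualAddress": {"bindAddr": bind_addr, "port": vip_port},
            },
            "backend": {"serviceName": service_name, "servicePort": service_port},
        }
    }


# Values taken from the NGINX and Tomcat examples in this post.
nginx_cfg = virtual_server_config("f5-nginx", 80, "10.9.130.210")
tomcat_cfg = virtual_server_config("f5-tomcat", 8080, "10.9.130.220")
print(json.dumps(nginx_cfg, indent=2))
```

The printed JSON can be pasted into the `data: |-` block of the ConfigMap.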

NGINX

[root@kube-sandbox ~]# cat /f5-nginx.yaml
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: f5-nginx
spec:
  replicas: 1
  template:
    metadata:
      labels:
        run: f5-nginx
    spec:
      containers:
      - name: f5-nginx
        image: nginx:1.9.1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: f5-nginx
  labels:
    run: f5-nginx
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  type: NodePort
  selector:
    run: f5-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: f5-nginx
  namespace: default
  labels:
    f5type: virtual-server
data:
  schema: "f5schemadb://bigip-virtual-server_v0.1.3.json"
  data: |-
    {
      "virtualServer": {
        "frontend": {
          "balance": "round-robin",
          "mode": "http",
          "partition": "kubernetes",
          "virtualAddress": {
            "bindAddr": "10.9.130.210",
            "port": 80
          }
        },
        "backend": {
          "serviceName": "f5-nginx",
          "servicePort": 80
        }
      }
    }

[root@kube-sandbox ~]# kubectl create -f /f5-nginx.yaml
deployment "f5-nginx" created
service "f5-nginx" created
configmap "f5-nginx" created

[root@kube-sandbox ~]# kubectl get pods
NAME                      READY     STATUS    RESTARTS   AGE
f5-nginx-13163279-58zrg   1/1       Running   0          1m

[root@kube-sandbox ~]# kubectl describe svc f5-nginx
Name:              f5-nginx
Namespace:         default
Labels:            run=f5-nginx
Annotations:       <none>
Selector:          run=f5-nginx
Type:              NodePort
IP:                10.32.210.76
Port:              <unset>  80/TCP
NodePort:          <unset>  32353/TCP
Endpoints:         192.168.54.91:80
Session Affinity:  None
Events:            <none>

Tomcat

[root@kube-sandbox ~]# cat /f5-tomcat.yaml
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: f5-tomcat
spec:
  replicas: 1
  template:
    metadata:
      labels:
        run: f5-tomcat
    spec:
      containers:
      - name: f5-tomcat
        image: tomcat
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: f5-tomcat
  labels:
    run: f5-tomcat
spec:
  ports:
  - port: 8080
    protocol: TCP
    targetPort: 8080
  type: NodePort
  selector:
    run: f5-tomcat
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: f5-tomcat
  namespace: default
  labels:
    f5type: virtual-server
data:
  schema: "f5schemadb://bigip-virtual-server_v0.1.3.json"
  data: |-
    {
      "virtualServer": {
        "frontend": {
          "balance": "round-robin",
          "mode": "http",
          "partition": "kubernetes",
          "virtualAddress": {
            "bindAddr": "10.9.130.220",
            "port": 80
          }
        },
        "backend": {
          "serviceName": "f5-tomcat",
          "servicePort": 8080
        }
      }
    }

[root@kube-sandbox ~]# kubectl create -f /f5-tomcat.yaml
deployment "f5-tomcat" created
service "f5-tomcat" created
configmap "f5-tomcat" created

[root@kube-sandbox ~]# kubectl get pods
NAME                         READY     STATUS    RESTARTS   AGE
f5-nginx-13163279-58zrg      1/1       Running   0          1m
f5-tomcat-3204334164-wg5ck   1/1       Running   0          1m

[root@kube-sandbox ~]# kubectl describe svc f5-tomcat
Name:              f5-tomcat
Namespace:         default
Labels:            run=f5-tomcat
Annotations:       <none>
Selector:          run=f5-tomcat
Type:              NodePort
IP:                10.32.103.149
Port:              <unset>  8080/TCP
NodePort:          <unset>  30142/TCP
Endpoints:         192.168.54.92:8080
Session Affinity:  None
Events:            <none>

Look at the F5-k8s-controller logs; they show that two virtual server configs were written.

[root@kube-sandbox ~]# kubectl logs k8s-bigip-ctlr-deployment-1734878172-hljql -n kube-system
2017/09/28 06:39:55 [INFO] ConfigWriter started: 0xc42044b080
2017/09/28 06:39:55 [INFO] Started config driver sub-process at pid: 19
2017/09/28 06:39:55 [INFO] NodePoller (0xc420410680) registering new listener: 0x405770
2017/09/28 06:39:55 [INFO] NodePoller started: (0xc420410680)
2017/09/28 06:39:55 [INFO] Wrote 0 Virtual Server configs
2017/09/28 06:39:56 [INFO] [2017-09-28 06:39:56,115 __main__ INFO] entering inotify loop to watch /tmp/k8s-bigip-ctlr.config211427345/config.json
2017/09/28 06:49:27 [INFO] Wrote 1 Virtual Server configs
2017/09/28 06:49:35 [INFO] Wrote 2 Virtual Server configs
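If you want to check this from a script rather than by eye, the count can be pulled out of the log text. The snippet below is a small sketch that assumes the log line format shown in this post ("Wrote N Virtual Server configs"); other controller versions may log differently, and the function name is my own.

```python
import re

# Matches the controller log lines shown in this post.
WROTE_RE = re.compile(r"Wrote (\d+) Virtual Server configs")


def latest_virtual_server_count(log_text):
    """Return the count from the last 'Wrote N Virtual Server configs' line, or None."""
    counts = [int(match.group(1)) for match in WROTE_RE.finditer(log_text)]
    return counts[-1] if counts else None


sample_log = """\
2017/09/28 06:39:55 [INFO] Wrote 0 Virtual Server configs
2017/09/28 06:49:27 [INFO] Wrote 1 Virtual Server configs
2017/09/28 06:49:35 [INFO] Wrote 2 Virtual Server configs
"""
print(latest_virtual_server_count(sample_log))
```

In practice the log text would come from the `kubectl logs` command shown above.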

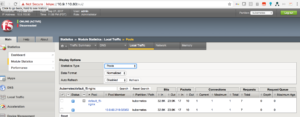

Lets login to F5-load-balancer and verify our virtual servers

You can see above 2 virtual servers are created

Lets access the virtual servers via browser

- F5 as Ingress Controller

We will create an Ingress resource to load-balance between NGINX and Tomcat using a single virtual server, based on the hostname in the HTTP request. Ingress resources provide L7 path/hostname routing of HTTP requests. The Ingress controller listens for client requests and, based on the rules defined in the Ingress resource, sends the traffic to the respective backends (services, e.g. nginx or tomcat).

This example demonstrates Ingress usage. For this lab, we will create in our cluster:

- A backend that will receive requests for nginx.itp-inc.com

- A backend that will receive requests for tomcat.itp-inc.com

Routing will be based on the Host header in the HTTP request:

nginx.itp-inc.com ====> will be sent to f5-nginx

tomcat.itp-inc.com ====> will be sent to f5-tomcat
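Conceptually, this host-based routing is a lookup from the request's Host header to a backend service. The Python sketch below models only that lookup to make the idea concrete; it is not how the BIG-IP implements it, and the `RULES` table and `route` function are names I made up for illustration.

```python
# Host header -> (service name, service port), mirroring the routing described above.
RULES = {
    "nginx.itp-inc.com": ("f5-nginx", 80),
    "tomcat.itp-inc.com": ("f5-tomcat", 8080),
}


def route(host_header, rules=RULES):
    """Pick a backend from the Host header; return None when no rule matches."""
    # Drop an optional ":port" suffix and compare case-insensitively.
    host = host_header.split(":", 1)[0].strip().lower()
    return rules.get(host)


print(route("nginx.itp-inc.com"))
print(route("tomcat.itp-inc.com:80"))
print(route("unknown.example.com"))
```

Both hostnames resolve to the same virtual server IP; only the Host header decides which service receives the request.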

Deploy the F5 Ingress resource below.

[root@kube-sandbox ~]# cat /f5-ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: nginx-tomcat-ingress
  annotations:
    virtual-server.f5.com/ip: "10.9.130.240"
    virtual-server.f5.com/http-port: "80"
    virtual-server.f5.com/partition: "kubernetes"
    virtual-server.f5.com/health: |
      [
        {
          "path": "nginx.itp-inc.com/",
          "send": "HTTP GET /",
          "interval": 5,
          "timeout": 15
        }, {
          "path": "tomcat.itp-inc.com/",
          "send": "HTTP GET /",
          "interval": 5,
          "timeout": 15
        }
      ]
    kubernetes.io/ingress.class: "f5"
spec:
  rules:
  - host: nginx.itp-inc.com
    http:
      paths:
      - backend:
          serviceName: f5-nginx
          servicePort: 80
  - host: tomcat.itp-inc.com
    http:
      paths:
      - backend:
          serviceName: f5-tomcat
          servicePort: 8080

[root@kube-sandbox ~]# kubectl create -f /f5-ingress.yaml
ingress "nginx-tomcat-ingress" created

[root@kube-sandbox ~]# kubectl get ing
NAME                   HOSTS                                  ADDRESS        PORTS     AGE
nginx-tomcat-ingress   nginx.itp-inc.com,tomcat.itp-inc.com   10.9.130.240   80        4m

[root@kube-sandbox ~]# kubectl describe ing
Name:             nginx-tomcat-ingress
Namespace:        default
Address:          10.9.130.240
Default backend:  default-http-backend:80 (none)
Rules:
  Host                Path  Backends
  ----                ----  --------
  nginx.itp-inc.com
                            f5-nginx:80 (192.168.54.91:80)
  tomcat.itp-inc.com
                            f5-tomcat:8080 (192.168.54.92:8080)
Annotations:
Events:  <none>

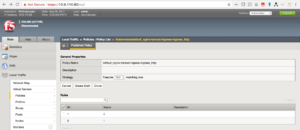

We can see on the F5 load balancer that a virtual server called default_nginx-tomcat-ingress-ingress_http has been created.

I have created DNS A records for nginx.itp-inc.com and tomcat.itp-inc.com that point to the F5 load-balancer virtual server IP (10.9.130.240).

[root@kube-sandbox ~]# ping nginx.itp-inc.com

PING nginx.itp-inc.com (10.9.130.240) 56(84) bytes of data.

64 bytes from 10.9.130.240: icmp_seq=1 ttl=254 time=0.852 ms

64 bytes from 10.9.130.240: icmp_seq=2 ttl=254 time=1.98 ms

^C

--- nginx.itp-inc.com ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 0.852/1.420/1.989/0.569 ms

[root@kube-sandbox ~]# ping tomcat.itp-inc.com

PING tomcat.itp-inc.com (10.9.130.240) 56(84) bytes of data.

64 bytes from 10.9.130.240: icmp_seq=1 ttl=254 time=5.51 ms

64 bytes from 10.9.130.240: icmp_seq=2 ttl=254 time=1.89 ms

^C

--- tomcat.itp-inc.com ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 1.893/3.705/5.517/1.812 ms

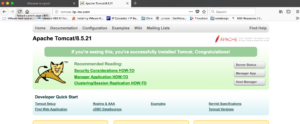

[root@kube-sandbox ~]# Now lets check in browser and see if we can access the webpages

We can see above both the webpages are accessible on a single virtual server based on hostname routing using Ingress.
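The same check can be done without a browser, and even without DNS records, by connecting to the virtual server IP and setting the Host header explicitly. The sketch below uses only the Python standard library; the function names are my own, and `fetch_status_line` needs network access to the virtual server, so its call is left commented out.

```python
import socket


def build_get_request(host, path="/"):
    """Return the bytes of a minimal HTTP/1.1 GET request carrying the given Host header."""
    lines = [f"GET {path} HTTP/1.1", f"Host: {host}", "Connection: close", "", ""]
    return "\r\n".join(lines).encode("ascii")


def fetch_status_line(vip, host, port=80, timeout=5.0):
    """Send the request to the virtual server IP and return the first response line."""
    with socket.create_connection((vip, port), timeout=timeout) as sock:
        sock.sendall(build_get_request(host))
        response = sock.recv(4096)
    return response.split(b"\r\n", 1)[0].decode("ascii", errors="replace")


print(build_get_request("nginx.itp-inc.com").decode("ascii"))
# Requires reachability to the virtual server from this post:
# print(fetch_status_line("10.9.130.240", "tomcat.itp-inc.com"))
```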

Hopefully this article gives you some insight into how to integrate F5 with Kubernetes to expose applications and services to the external world.

Drop a comment if you have any questions.